Given the business climate and local commitments, it is hard for me to be at DAC. But with our keen focus on Verification, it is kind of important for CVC (www.cvcblr.com) to share our thoughts on the fresh ideas/technologies in Verification that are being demo-ed at DAC-2010 (www.dac.com). Leaving the BIG-3 out (I hope to blog separately, prior to DAC, about what we see as “updates” from them), here is a quick round-up of what we see as promising solutions that any DAC attendee in the Verification domain might be interested in. Feel free to comment via our blog @ www.cvcblr.com/blog – we would love to hear from you!

NextOp

One of the most promising start-ups in the assertion based verification domain. They have been in stealth mode for a few years, and only recently has much information come out about their technology. It all started with an eval report from a real user and active follow-ups since then – see: http://www.cvcblr.com/blog/?p=147

Ben Cohen (www.systemverilog.us) recently had some good discussions about this technology based on our DVCon-2010 paper on SVA (contact us to get a copy: http://www.cvcblr.com/about_us). The technology did find an interesting bug via simulation run –> property extraction –> coverage hole –> bug! It is a rather long route, but an interesting approach nonetheless. See details at: http://www.cvcblr.com/blog/?p=163

Make sure you visit their booth @DAC (NextOp exhibits at Booth #1442) to learn more. In a nutshell, their technology is about analyzing existing RTL & testbench+testcase (via regression) and extracting quality properties for your design – then it is up to the RTL designers to qualify whether these “properties” are assertions/coverage/don’t cares. Their promise is minimal noise, but your mileage may vary!
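To make the "simulation run –> property extraction" idea a bit more concrete, here is a toy sketch in Python. It is NOT NextOp's algorithm (which is not public as far as we know); it only assumes that a regression gives us per-cycle signal samples, and it proposes same-cycle implications that were never violated. The signal names (req, gnt, busy), the clock name and the trace values are all made up for illustration.

```python
from itertools import permutations


def mine_implications(trace):
    """Return (a, b) pairs where 'a implies b' held on every cycle of the
    trace and 'a' was high at least once (so the candidate is not vacuous)."""
    signals = sorted(trace[0])
    candidates = []
    for a, b in permutations(signals, 2):
        fired = [cycle for cycle in trace if cycle[a]]
        if fired and all(cycle[b] for cycle in fired):
            candidates.append((a, b))
    return candidates


def to_sva(a, b, clk="clk"):
    """Render a candidate as an SVA-style same-cycle implication.
    The clock name is an assumption."""
    return f"assert property (@(posedge {clk}) {a} |-> {b});"


# Hypothetical per-cycle samples from one regression run.
TRACE = [
    {"req": 0, "gnt": 0, "busy": 0},
    {"req": 1, "gnt": 0, "busy": 1},
    {"req": 1, "gnt": 1, "busy": 1},
    {"req": 0, "gnt": 0, "busy": 1},
]

if __name__ == "__main__":
    for a, b in mine_implications(TRACE):
        print(to_sva(a, b))
```

Every candidate that comes out is only "true so far": the designer still has to decide whether it is a real assertion, a coverage hole (the tests never exercised the violating case) or a don't care. That is exactly where the "noise" question comes from.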

Vennsa’s OnPoint

If you ask anyone in the EDA/Semiconductor industry about the “elephant in the room” problem in front-end VLSI, the answer is loud-n-clear: DEBUG! Besides SpringSoft/Novas, no one seemed to have the perseverance needed to sail through tough times trying to address that problem. (Remember Veritools, anyone, BTW?) Now we have a genuine attempt to automate debug – Vennsa’s OnPoint. Not much is known yet about it, but here is a picture (Copyright by Vennsa http://www.vennsa.com/ ):

This actually fits very nicely with our unique workshop on “Debug” (see: www.cvcblr.com/trainings) – wherein we look at some of the common debug problems and demonstrate how little tricks with TCL, GUI/Markers etc. can save you hours, if not days!

Look at some of our earlier tweets on OnPoint at www.twitter.com/sricvc to get some more info.

I’m sure we will hear more about it in coming weeks/months.

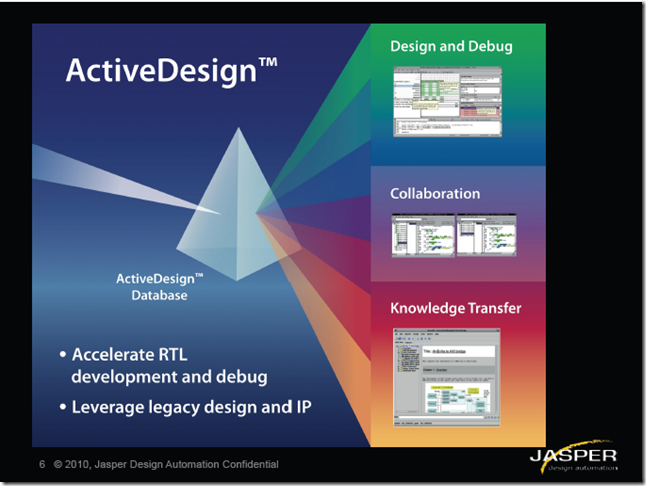

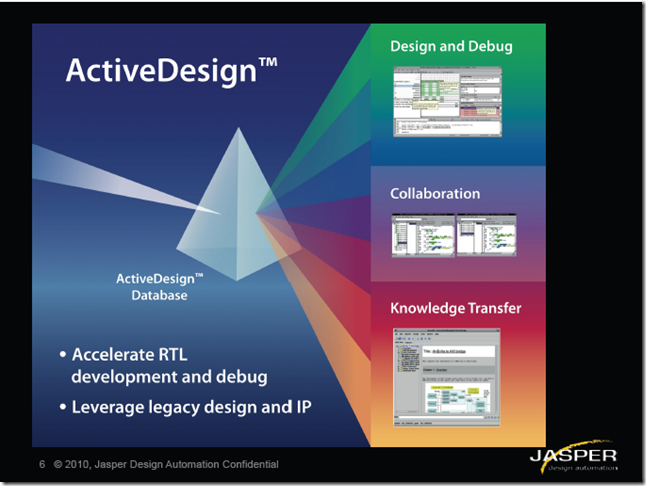

Jasper’s ActiveDesign

One of the most charismatic EDA tools that I’ve come across so far – that is, if they really deliver on the “Twitter of RTL Design” expectation that has been set for it. A picture is worth more than…here you go:

Read more about it at: http://www.cvcblr.com/blog/?p=144

Zocalo-tech

Do you care to approach your ABV adoption more methodically? Quoting Harry Foster, all-time ABV promoter, from his invited tutorial entitled “Assertion-Based Verification: Industry Myths to Realities”:

……”what differentiates a successful team from an unsuccessful team is process and adoption of new verification methods. Unsuccessful teams tend to approach development in an ad hoc fashion, while successful teams employ a more mature level of methodology that is systematic”. ……

Now Zocalo is one vendor trying to address that “methodology” aspect of ABV – primarily via their Bird-dog. We have looked at their Zazz-OVL, and even during today’s local SVA training (http://www.cvcblr.com/trng_profiles/CVC_LG_SVA_profile.pdf) we were discussing how complex some of the OVL choices could be, and I mentioned ZazzOVL. As the Dutch put it, it is “jammer” (pronounce it as “yammer”, see: http://forum.wordreference.com/showthread.php?t=359560) that we didn’t have the tool handy to show off the value (during the lab session, I mean). So make no mistake – their ZazzOVL is very, very handy indeed – if you are adding OVLs, that is.

Coming back to their offerings – Bird-dog is a very interesting approach, very much for those assertion enthusiasts who ask “where is the maximum ROI of adding assertions?”. Their Visual-SVA is like a “temporal GUI/editor” for complex SVA coding; not my personal cup-of-tea, but I do see value for some there. However, generating “traces” for assertions within Visual-SVA is certainly a good attempt. Let’s see how they fare in real life usage! Visit Zocalo Tech at Booth #1509.

The all new UVM (a la erstwhile OVM)

Surely you have heard of that – UVM, a sincere effort from Accellera to arrive at a “Universal” methodology from the seemingly competing OVM & VMM. Unless you want to risk your company not paying off your DAC bills, you wouldn’t want to miss that UVM booth :-) Honestly – I believe everyone is looking forward to that. As the Accellera PR puts it:

Accellera's DAC breakfast, sponsored by Cadence, Mentor and Synopsys, will feature a standards update with an overview of how the Universal Verification Methodology (UVM) standard supports verification tool interoperability and gives IP and EDA users more choices, and a panel on "UVM: Charting the New Territory." This event continues the celebration of Accellera's 10 years of standards excellence.

For the first time, all 3 major vendors “sponsor” one event promoting ONE methodology – great news indeed for the users. BTW, there is Aldec catching up on SystemVerilog support with their Riviera-Pro product line. Ask them for VMM/OVM/UVM support updates at: http://www.aldec.com/registration/dac

Agnisys's OVM/UVM management kits

A young EDA company based in Noida, India with a solid EDA background (Anupam). They have iDesignSpec & iVerifySpec as products - one is for Register automation and the other for overall Verification management. Register automation has been long awaited/wished for; almost 8 years back we at Intel used Perl+DOC (Table) for something similar - glad to see a much more finished end product now. It can emit VMM-RAL and OVM code, and soon perhaps UVM code too.
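For readers new to register automation, the basic idea is "one spec table in, many generated views out". Below is a minimal Python sketch of that idea only; it is not how iDesignSpec works and it does not produce RAL/OVM code. The register names, offsets, fields and the generated localparam naming are all assumptions made up for this example.

```python
# Hypothetical register spec: each field is (name, lsb, width).
REGISTERS = [
    {"name": "CTRL", "offset": 0x00, "fields": [("ENABLE", 0, 1), ("MODE", 4, 4)]},
    {"name": "STATUS", "offset": 0x04, "fields": [("DONE", 0, 1)]},
]


def field_mask(lsb, width):
    """Bit mask covering 'width' bits starting at bit 'lsb'."""
    return ((1 << width) - 1) << lsb


def emit_params(registers):
    """Emit one address localparam per register and one mask per field."""
    lines = []
    for reg in registers:
        lines.append(f"localparam {reg['name']}_ADDR = 32'h{reg['offset']:08X};")
        for fname, lsb, width in reg["fields"]:
            mask = field_mask(lsb, width)
            lines.append(f"localparam {reg['name']}_{fname}_MASK = 32'h{mask:08X};")
    return lines


if __name__ == "__main__":
    print("\n".join(emit_params(REGISTERS)))
```

The point of keeping the spec as plain data is that the same table could feed a documentation generator, a C header and the verification model, so the three never drift apart.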

Sapient-Inc's IC management

Another young EDA company, according to the founder - Subash, a long time chip designer/manager:

I started Sapient-IC from the pain and frustration of managing IC products. The die size grows, schedule slips, VP yells at everybody. This is what I want address. Analytics for decision makers, comparative analysis for design choices to financial analysis.

Breker’s Trek

A not-so-young EDA company (compared to the likes of NextOp/Zocalo etc.) with some interesting success stories with NVidia, STMicro. Their Trek is certainly a refreshing approach to testcase writing – especially for SoC Verification. See: http://www.cvcblr.com/blog/?p=148 for more details.

RealIntent’s Ascent

So much has been said and written about Linters – yet their adoption has been hampered heavily by the amount of “noise” they create. RealIntent’s Ascent claims to produce less of it – and that is their primary selling point. Not sure how they achieve that – given that noise is a natural side-effect of trying to “find faults” with any given code.

SpringSoft

Check with them what’s up with their Certess/Certitude – it is an innovative approach for sure – mutation based TB qualification. From what we have heard locally, there have been successes and also some additional “noise”.
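If "mutation based TB qualification" sounds abstract, here is a toy illustration in Python. It is only a sketch of the general mutation-testing idea, not Certitude's implementation: we inject small faults ("mutants") into a design and check whether the testbench notices. The 8-bit adder, the three mutants and both testbenches are hypothetical.

```python
def adder(a, b):
    """Reference 'design': an 8-bit wrapping adder."""
    return (a + b) & 0xFF


# Hypothetical mutants: each one is the design with a single injected fault.
MUTANTS = {
    "plus_to_minus": lambda a, b: (a - b) & 0xFF,
    "drop_mask": lambda a, b: a + b,
    "plus_to_or": lambda a, b: (a | b) & 0xFF,
}


def weak_testbench(dut):
    """Only small, non-overlapping operands: never exercises carry or wrap."""
    return dut(1, 2) == 3 and dut(0, 0) == 0


def strong_testbench(dut):
    """Adds a carry case and an overflow case on top of the weak checks."""
    return weak_testbench(dut) and dut(3, 1) == 4 and dut(200, 100) == 44


def surviving_mutants(testbench, mutants):
    """Names of mutants the testbench fails to detect (sorted)."""
    assert testbench(adder), "testbench must pass on the unmodified design"
    return sorted(name for name, dut in mutants.items() if testbench(dut))


if __name__ == "__main__":
    print("weak:  ", surviving_mutants(weak_testbench, MUTANTS))
    print("strong:", surviving_mutants(strong_testbench, MUTANTS))
```

A surviving mutant means the testbench passed even though the design was broken, i.e. a checking or stimulus hole. The "noise" comes from mutants that survive for uninteresting reasons (for example, a fault in logic that can never affect an output).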